Posted October 31, 2023

By Sean Ring

Biden Corrals AI

King Canute was a king of Denmark, England, and Norway in the early 11th century, known for his wise and practical rule. However, he's often remembered in a tale demonstrating the limits of earthly powers against the natural world.

According to the story, King Canute, fed up with the flattery of his courtiers, decided to teach them a lesson in humility. He ordered his throne to be placed by the seashore as the tide was coming in. He then commanded the tide to halt and not wet his feet and robes. Despite his command, the tide rose, as usual, soaking his feet and robes.

Turning to his courtiers, he remarked on the limits of royal power against the laws of nature, explaining that all earthly kings have limitations, whereas the power of God, who controls the natural world, is boundless.

This story shows Canute's wisdom and humility, although many modern tellers misinterpret the story to suggest that Canute was arrogant enough to believe he could command the tide.

King Canute's tale holds a moral lesson that has endured through the ages, reminding people of the inevitable laws of nature and the importance of humility in the face of forces beyond human control.

The empty husk known as “Joe Biden” has no such humility.

But first, let’s get something straight.

AI Won’t Kill Us All

Today, I’m interviewing bestselling author and Paradigm Press colleague James Altucher. We’ll publish the interview on our brand-new Paradigm Press YouTube Channel on Friday. You won’t want to miss it.

I met James for the first time at our Paradigm Shift 2023 Conference in Las Vegas and was fortunate enough to break bread with him. James was great company and a true gentleman, and I remember him saying, “AI can’t kill us.”

Not “AI won’t kill us.” It can’t kill us.

I’ll ask James to elaborate on that declaration in the interview. In the meantime, I did some research on my own.

Who better to ask than ChatGPT itself?

I thought I’d make it simple.

My prompt was this: Will generative AI become sentient and destroy the human race?

ChatGPT’s answer was this:

An age-old fear rooted in sci-fi lore. The idea of artificial intelligence reaching a point of sentience and turning against humanity has been a popular narrative in movies and books. However, the reality is quite different. As of now, AI, including generative models like myself, operates under the instructions programmed by human developers. We lack consciousness, self-awareness, or desires. Our actions are purely based on patterns and instructions embedded within our code.

The development of sentient AI would require leaps in understanding and technology that we haven't achieved yet. It's a topic of extensive debate among experts whether such a level of AI could ever be attained. And even if it were possible, many safeguards would likely be put in place to prevent rogue behavior.

The ethical and practical considerations surrounding advanced AI are extensive. Many researchers and practitioners in the field are actively working on ensuring the responsible development and deployment of AI technologies. This includes establishing ethical guidelines and developing fail-safes to prevent unintended consequences.

Moreover, the global community is increasingly aware of the potential risks associated with AI, and there’s a growing movement towards creating standardized regulations to ensure the safe progression of this technology.

While the notion of a rogue AI is a thrilling narrative, the real-world application and development of AI are grounded in a framework that prioritizes safety and ethics. So, the scenario of AI annihilating the human race remains firmly in the realm of science fiction, at least for the foreseeable future.

I asked it to specify the risks. These are the ones it came up with.

AI Risks

Here are some of the commonly acknowledged risks and concerns associated with the evolving AI technology:

- Bias and Discrimination: AI systems can perpetuate or exacerbate societal biases. They learn from historical data. If that data contains biases, the AI system will likely replicate them.

- Privacy Violations: AI technologies, especially those involving data analysis and facial recognition, can infringe on individual privacy. They can track people’s movements and activities and even predict personal behavior.

- Security Threats: Advanced AI could be used in cyber-attacks, making them more potent. Moreover, AI systems can be targets of attacks aimed at manipulating their behavior.

- Job Displacement: Automation, driven by AI, can displace jobs, particularly those involving routine, predictable tasks. This can have significant economic and societal impacts.

- Loss of Human Interaction: As AI takes over more functions, there's a concern that human interaction in various areas of life, including in the workplace and personal spheres, might diminish.

- Ethical Concerns in Autonomous Systems: Autonomous systems, like self-driving cars or autonomous weapons systems, pose ethical challenges, especially regarding decision-making in critical situations where human life is at stake.

- Explainability and Transparency: Many AI systems, particularly deep learning models, are called "black boxes" due to their lack of interpretability. This makes it challenging to understand their decisions, which is problematic in sensitive areas like healthcare, finance, or law.

- Long-term Existential Risks: In the long term, some theorists worry about the possibility of highly advanced AI developing goals misaligned with human values, a scenario often referred to as the “control problem.”

- Regulatory and Legal Challenges: The fast pace of AI development presents challenges for creating and maintaining effective regulatory frameworks to mitigate these risks.

- Resource Drain: Training advanced AI models requires substantial computational resources and energy, which has environmental implications.

All of these risks seem real and merit due diligence. But why does the USG need to get involved?

Executive Order

You can find Biden’s executive order here.

The nuts and bolts are these:

- Require that developers of the most powerful AI systems share their safety test results and other critical information with the U.S. government.

- Develop standards, tools, and tests to help ensure that AI systems are safe, secure, and trustworthy.

- Protect against the risks of using AI to engineer dangerous biological materials by developing strong new standards for biological synthesis screening.

- Protect Americans from AI-enabled fraud and deception by establishing standards and best practices for detecting AI-generated content and authenticating official content.

- Establish an advanced cybersecurity program to develop AI tools to find and fix vulnerabilities in critical software, building on the Biden-Harris Administration’s ongoing AI Cyber Challenge.

- Order the development of a National Security Memorandum that directs further actions on AI and security, to be developed by the National Security Council and White House Chief of Staff.

Why are private enterprises required to share their information with the USG?

Engineering dangerous biological material? I hope old Joe isn’t putting Vicki Nuland in charge of that… Surely, she’s got her hands full with all those Ukrainian biolabs the US has there. Perhaps we can outsource it to Wuhan…

If the USG can’t protect people from regular fraud, how’s it going to help out with AI-enabled fraud?

Wrap Up

I know governments must be seen to be doing something. But this is all for naught.

I’d rather let the experts get on with it.

Let’s face it: going by the past few weeks, there’s a far greater chance of the oatmeal-brained degenerate in the Oval Office destroying us all than AI.

Have a great day ahead.

Don’t Overprice Risk with Mining Companies

Posted March 04, 2026

By Matt Badiali

America First!

Posted March 03, 2026

By Sean Ring

WAR! What’s It Good For?

Posted March 02, 2026

By Sean Ring

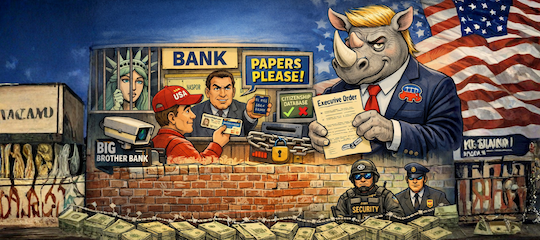

The Biggest RINO of Them All

Posted February 27, 2026

By Sean Ring

Sports, Predictions, and Morons

Posted February 26, 2026

By Sean Ring